Microsoft Just Made a Quiet Move with Loud Consequences

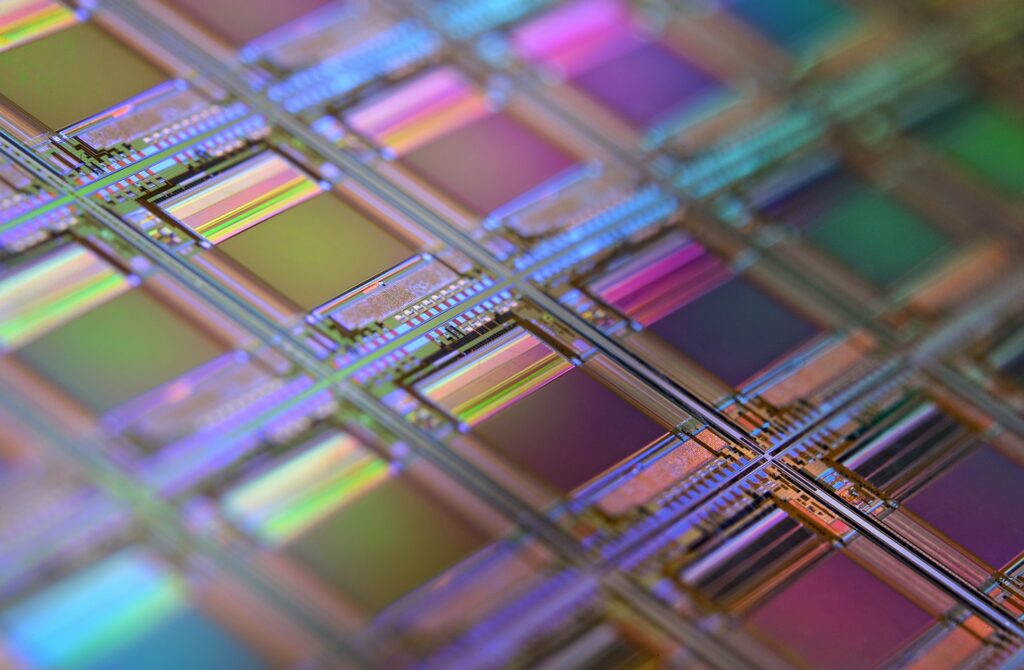

Instead of chasing benchmark headlines or model-size bragging rights, Microsoft introduced a cost-efficient AI chip designed to solve a more uncomfortable problem:

AI infrastructure economics are shifting, and inference costs now dominate AI cloud spending.

This new Microsoft AI chip is not about winning performance charts.

It is about reducing AI inference cost, lowering power consumption, and restoring margin discipline across cloud infrastructure.

And that shift may reshape AI product design, cloud economics, and creative workflows far more than another record-breaking model ever could.

Why Microsoft Built a Cost-Efficient AI Chip

For years, AI progress came with an unspoken tax.

Larger models required:

- More compute

- More electricity

- More cooling

- More expensive third-party hardware

Cloud providers absorbed the cost at first.

Then cloud customers felt it.

Now the entire ecosystem is feeling margin pressure.

Microsoft’s response is pragmatic.

This custom AI silicon is engineered to lower the cost per inference rather than to maximize peak performance.

In today’s AI economy, cost per AI operation often matters more than raw speed.

This marks a shift from maximal compute to optimal efficiency.

In other words: good enough acceleration at massive scale.
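
To make "cost per inference" concrete, here is a minimal Python sketch of the arithmetic. Every number in it is hypothetical (no published figures for Microsoft's chip or any real accelerator are assumed), and the cost model is deliberately simple: hourly hardware cost plus hourly energy cost, divided by inferences served per hour. It shows how a slower part can still win on cost per inference.

```python
# Hypothetical illustration only: none of these numbers are published
# figures for Microsoft's chip or any real accelerator.
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    hourly_cost_usd: float     # amortized hardware + hosting cost per hour (assumed)
    power_kw: float            # average power draw in kilowatts (assumed)
    inferences_per_sec: float  # sustained throughput (assumed)


def cost_per_million_inferences(acc: Accelerator, usd_per_kwh: float) -> float:
    """(hardware cost + energy cost) per hour, divided by inferences per hour."""
    energy_cost_per_hour = acc.power_kw * usd_per_kwh
    inferences_per_hour = acc.inferences_per_sec * 3600
    total_per_hour = acc.hourly_cost_usd + energy_cost_per_hour
    return total_per_hour / inferences_per_hour * 1_000_000


fast = Accelerator("peak-performance part", hourly_cost_usd=4.00, power_kw=1.0, inferences_per_sec=1000)
lean = Accelerator("efficiency-first part", hourly_cost_usd=1.50, power_kw=0.5, inferences_per_sec=600)

for acc in (fast, lean):
    cost = cost_per_million_inferences(acc, usd_per_kwh=0.10)
    print(f"{acc.name}: ${cost:.3f} per million inferences")
```

With these made-up inputs, the efficiency-first part is 40% slower but roughly 37% cheaper per inference, which is the trade described above.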

AI Inference vs Training: The Real Cost Battlefield

Most AI discussions still obsess over training.

That’s a mistake.

The real economic pressure comes from AI inference workloads:

- Chat responses

- Search queries

- Copilot suggestions

- Repeated micro-tasks executed millions of times per day

Inference is where AI usage explodes — and where costs quietly compound.
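
A rough way to see costs compounding: training is paid once, while inference is paid on every request. The sketch below uses purely hypothetical figures and a simplified model that ignores retraining, price changes, and traffic growth. It finds the first day on which cumulative inference spend overtakes a one-time training bill.

```python
# Hypothetical illustration: the training cost, request volume, and
# per-request cost are assumptions, not estimates for any real model.
import math


def days_until_inference_exceeds_training(training_cost_usd: float,
                                          requests_per_day: float,
                                          cost_per_request_usd: float) -> int:
    """First whole day on which cumulative inference spend is strictly
    greater than the one-time training spend (simplified model)."""
    daily_inference_cost = requests_per_day * cost_per_request_usd
    return math.floor(training_cost_usd / daily_inference_cost) + 1


training = 10_000_000   # one-time training bill in USD (assumed)
requests = 30_000_000   # requests served per day (assumed)
per_request = 0.002     # USD per request (assumed)

day = days_until_inference_exceeds_training(training, requests, per_request)
yearly_inference = requests * per_request * 365
print(f"Inference spend overtakes training on day {day}")
print(f"One year of inference: ${yearly_inference:,.0f} vs training ${training:,.0f}")
```

Halving the per-request cost roughly doubles the time before inference overtakes training, which is why cost per inference is the lever an efficiency-first chip pulls.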

Microsoft’s AI chip is optimized for:

- Repetition

- Predictability

- Efficiency per watt

- Thermal stability in large data centers

This is AI infrastructure designed for everyday usage, not one-off demos.

Energy Efficiency Is Now an AI Design Constraint

Electricity has become one of AI’s biggest bottlenecks.

So has cooling.

Modern data centers are:

- Hot

- Power-hungry

- Expensive to operate

Microsoft’s chip design reflects a new reality:

Thermal efficiency is a business decision, not a sustainability slogan.

Lower power draw means:

- Lower operating costs

- Higher deployment density

- Fewer infrastructure bottlenecks
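
The density argument is simple arithmetic once a facility has a fixed power budget. This sketch uses hypothetical values throughout and uses PUE (power usage effectiveness: total facility power divided by IT equipment power) as a stand-in for cooling and other overhead. It is an illustration, not a model of any Microsoft data center.

```python
# Hypothetical illustration: the budget, PUE, price, and per-chip wattages
# below are assumptions, not figures for any real data center or chip.
import math


def chips_that_fit(facility_budget_kw: float, chip_kw: float, pue: float) -> int:
    """How many accelerators a fixed facility power budget can support.

    Each chip effectively consumes chip_kw * pue of facility power,
    where the PUE multiplier covers cooling and other overhead.
    """
    return math.floor(facility_budget_kw / (chip_kw * pue))


def annual_energy_cost_usd(n_chips: int, chip_kw: float, pue: float, usd_per_kwh: float) -> float:
    """Energy bill for n_chips running all year, including PUE overhead."""
    hours_per_year = 24 * 365
    return n_chips * chip_kw * pue * hours_per_year * usd_per_kwh


BUDGET_KW = 10_000  # a hypothetical 10 MW facility
PUE = 1.25          # assumed overhead ratio
PRICE = 0.10        # assumed USD per kWh

for label, chip_kw in (("1.0 kW chip", 1.0), ("0.5 kW chip", 0.5)):
    n = chips_that_fit(BUDGET_KW, chip_kw, PUE)
    per_chip = annual_energy_cost_usd(1, chip_kw, PUE, PRICE)
    print(f"{label}: {n} chips fit, ~${per_chip:,.0f} energy per chip per year")
```

Halving per-chip power doubles how many accelerators the same budget supports and halves each chip's energy bill, which is the "higher deployment density" and "lower operating costs" listed above.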

This nudges engineering culture away from gambling on brute force and toward architectural discipline.

Every milliwatt counts.

Owning AI Silicon Changes Cloud Economics

There’s another layer here: control.

Relying entirely on external chip vendors means inheriting:

- Their margins

- Their supply constraints

- Their pricing power

By owning more of its AI silicon stack, Microsoft reduces dependency risk, gains leverage in negotiations, and improves Azure AI margin predictability. Custom AI chips turn supply chains into strategic assets.


How Cheap AI Changes Product Design

When AI is expensive, products behave cautiously.

Usage is metered.

Features are constrained.

Design decisions are shaped by fear of cost overruns.

When AI inference becomes cheap, something changes.

Designers can assume:

- AI is always on

- Personalization is default

- Micro-interactions can be intelligent

- Feedback loops can be continuous

Cheap AI enables generosity in design.

This directly impacts:

- User experience

- Interface decisions

- Product storytelling

AI stops feeling like a premium feature and starts feeling like invisible infrastructure.

The Cultural Shift: Efficiency Is the New Status Signal

There’s a cultural undertone to Microsoft’s move.

For years, excess compute signaled power.

Now restraint signals intelligence.

Efficiency is no longer boring.

Frugality is no longer weakness.

This AI chip represents a new aesthetic:

- Lean systems

- Elegant constraints

- Sustainable scale

Designers should pay attention.

Constraints spark creativity.

Cheap, reliable AI invites better human-centered design.

What This Means for Startups and Smaller Teams

This shift matters beyond Microsoft.

Lower AI infrastructure costs mean:

- Startups can deploy AI with less financial risk

- Smaller teams can afford to experiment

- Innovation decentralizes

When inference costs fall, creativity spreads.

AI stops being gated by capital and starts being shaped by perspective and intent.

That is how ecosystems diversify.

Environmental Impact Without the Marketing Spin

Lower power consumption per inference also means lower emissions per inference, for the same energy mix.

Microsoft didn’t position this chip as a climate product — but the implication is clear.

Cheaper AI aligns naturally with greener AI.

Sustainability becomes operational, not aspirational.

That matters to:

- Regulators

- Enterprises

- Younger technologists

- Long-term platform builders

Efficiency becomes ethical by default.

Why This Is a Defining AI Operations Moment

This isn’t a flashy consumer product.

You won’t see this chip in a store.

But you’ll feel it:

- Faster AI responses

- Lower API costs

- Fewer usage caps

- AI features quietly appearing everywhere

This is how AI goes mainstream.

Not through spectacle.

Through savings.

Budgets shape culture — and Microsoft just rewrote the budget equation.

The Future of AI Design Is Practical

There is a risk, of course.

Efficiency can slide into conservatism.

Cost discipline can dull ambition.

But Microsoft appears to understand the tradeoff.

Lower infrastructure costs free resources for exploration elsewhere.

This creates a new loop:

- Lower costs → more usage

- More usage → better models

- Better models → smarter tools

- Smarter tools → better design

Not bigger tools.

Better ones.

Final Takeaway

Microsoft’s AI chip is not about winning today’s benchmark wars.

It is about owning tomorrow’s economics.

This moment may look pivotal in hindsight — the point when AI stopped being extravagant and started being practical.

And practicality is what allows AI to disappear into the background, letting human intention take center stage.

The future of AI design is frugal, fluid, and everywhere.

Microsoft just laid the silicon foundation.

The rest of the industry will follow.

Reported by our AI news desk to keep you ahead of the curve.